Exploring Remote Resources

Look Around the Remote System

If you have not already connected to cluster.name, please do so now:

local$ ssh user@cluster.name

Take a look at your home directory on the remote system:

remote$ ls

What's different between your machine and the remote?

Open a second terminal window on your local computer and run the ls command (without logging in to cluster.name). What differences do you see?

Most high-performance computing systems run the Linux operating system, which is built around the UNIX Filesystem Hierarchy Standard. Instead of having a separate root for each hard drive or storage medium, all files and devices are anchored to the "root" directory, which is /:

remote$ ls /

bin   etc   lib64  proc  sbin     sys  var
boot  home  mnt    root  scratch  tmp  working
dev   lib   opt    run   srv      usr

The "home" directory is the one where we generally want to keep all of our files. Other

folders on a UNIX OS contain system files and change as you install new software or

upgrade your OS.
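The layout above can be explored from the shell. The following is a minimal sketch, assuming a POSIX shell in which the HOME variable is set (true on most Linux systems); the directories printed will differ from site to site.

```shell
# Print the absolute path of your home directory.
echo "$HOME"

# List the entries directly under the root directory, one per line.
ls -1 /
```

On many clusters the first command prints a path below /home, but this is a site convention rather than a guarantee.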

Using HPC filesystems

On HPC systems, you have a number of places where you can store your files.

These differ in both the amount of space allocated and whether or not they

are backed up.

- Home -- often a network filesystem; data stored here is available throughout the HPC system and is often backed up periodically. Files stored here are typically slower to access because the data is actually stored on another computer and transmitted over the network.

- Scratch -- typically faster than the networked Home directory, but not usually backed up, and should not be used for long-term storage.

- Work -- sometimes provided as an alternative to Scratch space, Work is a fast file system accessed over the network. Typically, this will have higher performance than your home directory, but lower performance than Scratch; it may not be backed up. It differs from Scratch space in that files in a work file system are not automatically deleted for you: you must manage the space yourself.
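To see how much space a filesystem offers, df and du are a reasonable starting point. This is a sketch only: many sites enforce per-user quotas that df does not report and provide their own quota tool, so treat the numbers as a rough guide.

```shell
# Size, used and available space of the filesystem that holds your home directory.
df -h "$HOME"

# Total size of everything below the current directory (slow on large trees).
du -sh .
```

The same commands work on a Scratch or Work directory once you know its path, which is site-specific.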

Nodes

Individual computers that compose a cluster are typically called nodes

(although you will also hear people call them servers, computers and

machines). On a cluster, there are different types of nodes for different

types of tasks. The node where you are right now is called the login node,

head node, landing pad, or submit node. A login node serves as an access

point to the cluster.

As a gateway, the login node should not be used for time-consuming or resource-intensive tasks. You should be alert to this, and check with your site's operators or documentation for details of what is and isn't allowed. The login node is well suited for uploading and downloading files, setting up software, and running tests. Generally speaking, in these lessons, we will avoid running jobs on the login node.

Who else is logged in to the login node?

remote$ who

This may show only your user ID, but there are likely several other people

(including fellow learners) connected right now.
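who prints one line per login session, so a user with several terminals open appears more than once. A small sketch for counting distinct users instead, assuming the user name is the first column of the output (as it is in the common implementations):

```shell
# Keep the first column (user name), drop duplicates, count what is left.
who | awk '{print $1}' | sort -u | wc -l
```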

Dedicated Transfer Nodes

If you want to transfer larger amounts of data to or from the cluster, some systems offer dedicated nodes for data transfers only. The motivation is that large data transfers should not obstruct the operation of the login node for everybody else. Check your cluster's documentation or ask its support team whether such a transfer node is available. As a rule of thumb, consider any transfer larger than 500 MB to 1 GB as large. These thresholds vary, for example with the speed of your own network connection and that of your cluster, among other factors.

The real work on a cluster gets done by the compute (or worker) nodes. Compute nodes come in many shapes and sizes, but generally are dedicated to long or hard tasks that require a lot of computational resources.

All interaction with the compute nodes is handled by a specialized piece of

software called a scheduler. We'll learn more about how to use the

scheduler to submit jobs next, but for now, it can also tell us more

information about the compute nodes.

For example, we can view all of the compute nodes by running the command sinfo.

remote$ sinfo

PARTITION  AVAIL  TIMELIMIT   NODES  STATE   NODELIST
compute*   up     7-00:00:00  1      drain*  gra259
compute*   up     7-00:00:00  11     down*   gra[8,99,211,268,376,635,647,803,85...
compute*   up     7-00:00:00  1      drng    gra272
compute*   up     7-00:00:00  31     comp    gra[988-991,994-1002,1006-1007,1015...
compute*   up     7-00:00:00  33     drain   gra[225-251,253-256,677,1026]
compute*   up     7-00:00:00  323    mix     gra[7,13,25,41,43-44,56,58-77,107-1...
compute*   up     7-00:00:00  464    alloc   gra[1-6,9-12,14-19,21-24,26-40,42,4...
compute*   up     7-00:00:00  176    idle    gra[78-98,123-124,128-162,170-172,2...
compute*   up     7-00:00:00  3      down    gra[20,801,937]

A lot of the nodes are busy running work for other users: we are not alone

here!
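The full listing is long on a large cluster. As a sketch, assuming the scheduler is Slurm (which provides sinfo), the --summarize option condenses it to one line per partition, with node counts shown as allocated/idle/other/total:

```shell
if command -v sinfo >/dev/null 2>&1; then
    # One line per partition; the NODES(A/I/O/T) column gives the node counts.
    sinfo --summarize
else
    # Not every machine has Slurm installed, e.g. your laptop.
    echo "sinfo not found: is this a Slurm cluster?"
fi
```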

There are also specialized machines used for managing disk storage, user authentication, and other infrastructure-related tasks. Although we do not typically log on to or interact with these machines directly, they enable a number of key features, like ensuring our user account and files are available throughout the HPC system.

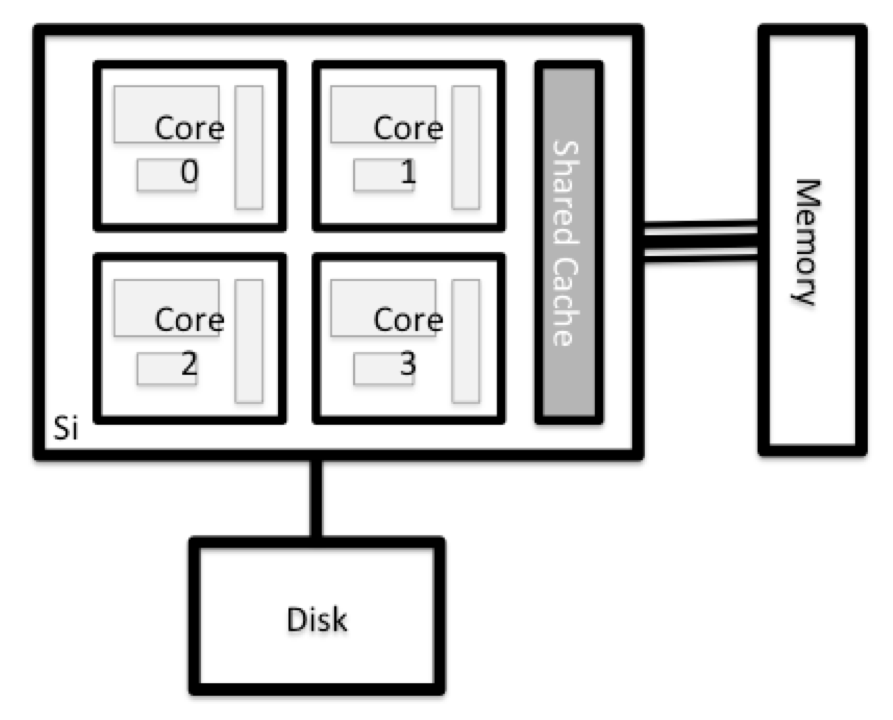

What's in a Node?

All of the nodes in an HPC system have the same components as your own laptop or desktop: CPUs (sometimes also called processors or cores), memory (or RAM), and disk space. CPUs are a computer's tool for actually running programs and calculations. Information about a current task is stored in the computer's memory. Disk refers to all storage that can be accessed like a file system. This is generally storage that can hold data permanently, i.e. data is still there even if the computer has been restarted. While this storage can be local (a hard drive installed inside the node), it is more common for nodes to connect to a shared, remote fileserver or cluster of servers.

Explore Your Computer

Try to find out the number of CPUs and amount of memory available on your

personal computer.

Note that, if you're logged in to the remote computer cluster, you need to log out first. To do so, type Ctrl+d or exit:

remote$ exit
local$

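In a macOS environment, you can use the following to get the number of CPUs and the amount of free memory:

local$ sysctl -n hw.ncpu
local$ vm_stat | awk '/Pages free/ {print "Free memory: " $3 * 4096 / 1048576 " MB"}'

The second command assumes a page size of 4096 bytes; the first line of the vm_stat output states the page size actually in use.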

In both Linux and macOS, you can use system monitors such as the built-in top, or install htop using apt in Ubuntu or brew in macOS.

local$ top
local$ htop
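On a Linux machine, the same information is available without installing anything. A minimal sketch, assuming GNU coreutils (for nproc) and a kernel that exposes /proc/meminfo; neither is available on macOS:

```shell
# Number of processing units installed on this machine.
nproc --all

# Total and currently available memory in kB, as reported by the kernel.
grep -E '^(MemTotal|MemAvailable):' /proc/meminfo
```

Running the same two commands after logging in to cluster.name reports the resources of the login node instead.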

Explore the Login Node

Now compare the resources of your computer with those of the login node.

Compare Your Computer, the Login Node and the Compute Node

Compare your laptop's number of processors and memory with the numbers you

see on the cluster login node and compute node. What implications do

you think the differences might have on running your research work on the

different systems and nodes?

Differences Between Nodes

Many HPC clusters have a variety of nodes optimized for particular workloads. Some nodes may have larger amounts of memory, or specialized resources such as Graphics Processing Units (GPUs or "video cards").
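With Slurm, sinfo can report the hardware of each group of nodes. A sketch, assuming sinfo is available; the format codes are %P (partition), %D (node count), %c (CPUs per node), %m (memory per node in MB) and %G (generic resources such as GPUs):

```shell
if command -v sinfo >/dev/null 2>&1; then
    # One line per group of identically configured nodes in each partition.
    sinfo --format="%P %D %c %m %G"
else
    echo "sinfo not found: is this a Slurm cluster?"
fi
```

Which resources appear in the last column depends entirely on how the site has configured its nodes.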

With all of this in mind, we will now cover how to talk to the cluster's

scheduler, and use it to start running our scripts and programs!